Analyzing Media Coverage of the 2015/2016 Refugee Crisis: What Natural Language Processing Reveals

This article examines how German media framed the 2015/2016 refugee crisis by applying Natural Language Processing techniques to over 15,000 articles from Bild and Spiegel Online. Using sentiment analysis, topic modeling, and named entity recognition, I reveal a clear decline in sentiment over time, especially after the Cologne New Year’s Eve incidents. The analysis shows how automated text processing can efficiently identify narrative shifts and provide quantitative evidence for media framing theories, while demonstrating the potential of AI-powered methods in political discourse analysis.

Lasse Rodeck (Universität Hamburg, lasserodeck@hotmail.de), March 30, 2025

Categories: Refugee Crisis, Media Analysis, Natural Language Processing, Sentiment Analysis, Political Discourse

2 Analyzing Media Coverage of the 2015/2016 Refugee Crisis: What Natural Language Processing Reveals

How did German media shape the narrative around refugees, and what can AI-powered text analysis teach us about analyzing media discourse?

2.1 Introduction

Large Language Models (LLMs) have dominated tech headlines since ChatGPT burst onto the scene, yet their capabilities extend far beyond generating text responses. Natural Language Processing (NLP) offers particularly promising applications in social science research, where analyzing large text corpora has traditionally been time-consuming and labor-intensive.

My bachelor thesis at the University of Hamburg examined how NLP methods can enhance political science research through a case study of media coverage during the 2015/2016 refugee crisis in Germany. This blog post shares key insights from that research, demonstrating how AI-powered text analysis can reveal patterns in media coverage that would be impractical to detect by manually reading thousands of articles.

My work focused on a central research question: How did the sentiment in German media coverage of refugees evolve during the 2015/2016 crisis? I was particularly interested in comparing coverage between two influential German news outlets with different editorial perspectives: Bild (a tabloid with center-right leanings) and Spiegel Online (SPON, a more liberal-leaning news magazine).

These specific outlets were selected based on their significant reach and different political orientations. Barthels et al. (2021) found that Bild was predominantly read by parliamentarians from more conservative parties (AfD, CDU, and FDP), while SPON was favored by representatives from more progressive parties (Greens, SPD, and Left Party). Notably, SPON maintained relatively high readership across the political spectrum, which makes it a useful baseline for comparison with the more right-leaning Bild.

2.2 Methodological Framework: Combining NLP and Communication Theory

This study combined computational methods with established theories from communication science, particularly framing theory and agenda-setting theory. These theories provided the conceptual foundation for understanding how media shapes public discourse:

Framing theory (Entman 1993a; Scheufele and Engelmann 2016) examines how media selects and emphasizes certain aspects of issues, promoting particular interpretations and evaluations. Framing operates on multiple levels, influencing how communicators, texts, receivers, and culture interact to create meaning. As Entman (1993b) explains, frames select “some aspects of a perceived reality and make them more salient in a communicating text, in such a way as to promote a particular problem definition, causal interpretation, moral evaluation, and/or treatment recommendation.”

Agenda-setting theory (Entman 2003; McCombs and Shaw 1972) describes how media influences which topics gain public attention, effectively determining what issues people think about. McCombs and Shaw’s original hypothesis posited that “while the mass media may have little influence on the direction or intensity of attitudes, the mass media set the agenda for each political campaign, influencing the salience of attitudes toward the political issues.” This theory suggests that analyzing media coverage patterns can reveal how public attention is being directed.

Entman (2003) extended this concept through the “cascade model,” which describes how frames originate from political elites, flow through media outlets, and reach the public. This model is particularly relevant for understanding how refugee crisis narratives developed and spread through German media.

2.2.1 NLP Methods

To analyze over 15,000 news articles, I employed three primary NLP techniques:

Sentiment Analysis: Using the sentiment.ai package (Wiseman, Nydick, and Wisner 2022), I assigned each article a sentiment score between -1 (very negative) and 1 (very positive). This quantified the emotional tone of coverage and allowed me to track changes over time.

The package builds on pretrained text embeddings rather than a fixed word list, so the score reflects context and word choice instead of simply adding up positive and negative words. Text can be scored at the sentence or document level; for this analysis, I used document-level sentiment to capture the overall tone of each article. This gives the approach an advantage over simpler lexicon-based methods, which struggle with negation, intensification, and context-dependent meaning.
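To see why lexicon-based methods struggle with negation, consider a deliberately naive lexicon scorer. This is not how sentiment.ai works; the tiny German word list, the negator set, and the three-token negation window are all invented for illustration. The score is the average polarity of the matched words, so it stays within the same range of -1 to 1.

```python
import re

# Hypothetical toy lexicon; real lexicons contain thousands of weighted entries
LEXICON = {"gut": 1.0, "hilfreich": 1.0, "willkommen": 1.0,
           "schlecht": -1.0, "gefährlich": -1.0, "chaos": -1.0}
NEGATORS = {"nicht", "kein", "keine", "nie"}  # hypothetical negator list


def tokenize(text):
    # Lowercase and keep runs of letters, including umlauts and ß
    return re.findall(r"[a-zäöüß]+", text.lower())


def lexicon_score(text, handle_negation=False, window=3):
    """Mean polarity of lexicon words in [-1, 1]; 0.0 if no word matches."""
    tokens = tokenize(text)
    hits = []
    for i, tok in enumerate(tokens):
        if tok not in LEXICON:
            continue
        polarity = LEXICON[tok]
        # Naive rule: flip polarity if a negator occurs in the preceding `window` tokens
        if handle_negation and any(t in NEGATORS for t in tokens[max(0, i - window):i]):
            polarity = -polarity
        hits.append(polarity)
    return sum(hits) / len(hits) if hits else 0.0


sentence = "Die Lage ist nicht gut."
print(lexicon_score(sentence))                        # 1.0: negation ignored
print(lexicon_score(sentence, handle_negation=True))  # -1.0: negation rule applied
```

Hand-written rules like this still miss negation across clauses, hedges such as “kaum” (hardly), and irony, which is where model-based scoring has the advantage.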

Structural Topic Modeling (STM): Rather than using predetermined categories, STM (Roberts, Stewart, and Tingley 2019) algorithmically identified thematic clusters in the corpus. This approach revealed the latent topical structure of the coverage and allowed me to analyze sentiment changes within specific topics.

Unlike traditional topic modeling approaches such as Latent Dirichlet Allocation (LDA), STM can incorporate metadata (like publication source or publication date) to model how topic prevalence and content vary with document attributes. This makes it particularly suitable for comparing coverage between different media outlets and tracking changes over time.

For this analysis, I experimented with different numbers of topics and evaluated the candidate models for coherence and interpretability. The model I selected has four distinct topics that capture the major themes in the corpus with little overlap.
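Coherence can be measured in several ways. The sketch below assumes the UMass-style semantic coherence of Mimno et al. (2011), which is the measure reported by the stm package's `semanticCoherence()`; the toy documents and top-word lists are invented. For every pair of a topic's top words, it compares how often the two appear in the same document with how often the higher-ranked word appears at all. Higher (less negative) values indicate a more coherent topic.

```python
import math
from itertools import combinations


def umass_coherence(top_words, documents):
    """UMass coherence of one topic.

    top_words: the topic's top words, ordered by descending probability.
    documents: iterable of token collections; only presence/absence matters.
    """
    doc_sets = [set(doc) for doc in documents]

    def doc_freq(*words):
        # Number of documents containing all of the given words
        return sum(1 for d in doc_sets if all(w in d for w in words))

    score = 0.0
    # combinations() preserves list order, so `higher` is always the more probable word
    for higher, lower in combinations(top_words, 2):
        d_higher = doc_freq(higher)
        if d_higher == 0:
            continue  # skip words absent from the corpus to avoid dividing by zero
        score += math.log((doc_freq(higher, lower) + 1) / d_higher)
    return score


# Invented mini-corpus of preprocessed articles
docs = [
    ["flüchtling", "grenze", "europa"],
    ["flüchtling", "grenze", "türkei"],
    ["anschlag", "terror", "paris"],
    ["flüchtling", "europa", "türkei"],
]
print(umass_coherence(["flüchtling", "grenze", "europa"], docs))   # words co-occur
print(umass_coherence(["flüchtling", "anschlag", "paris"], docs))  # mixed topic
```

Running this for each candidate number of topics and averaging over topics gives one input to the model choice; interpretability still has to be judged by reading the top words and example articles.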

Part-of-Speech (PoS) Tagging: This technique labels each word with its grammatical category (noun, verb, proper noun, and so on), which helps extract named entities (like politicians) and analyze how they were represented in the discourse.

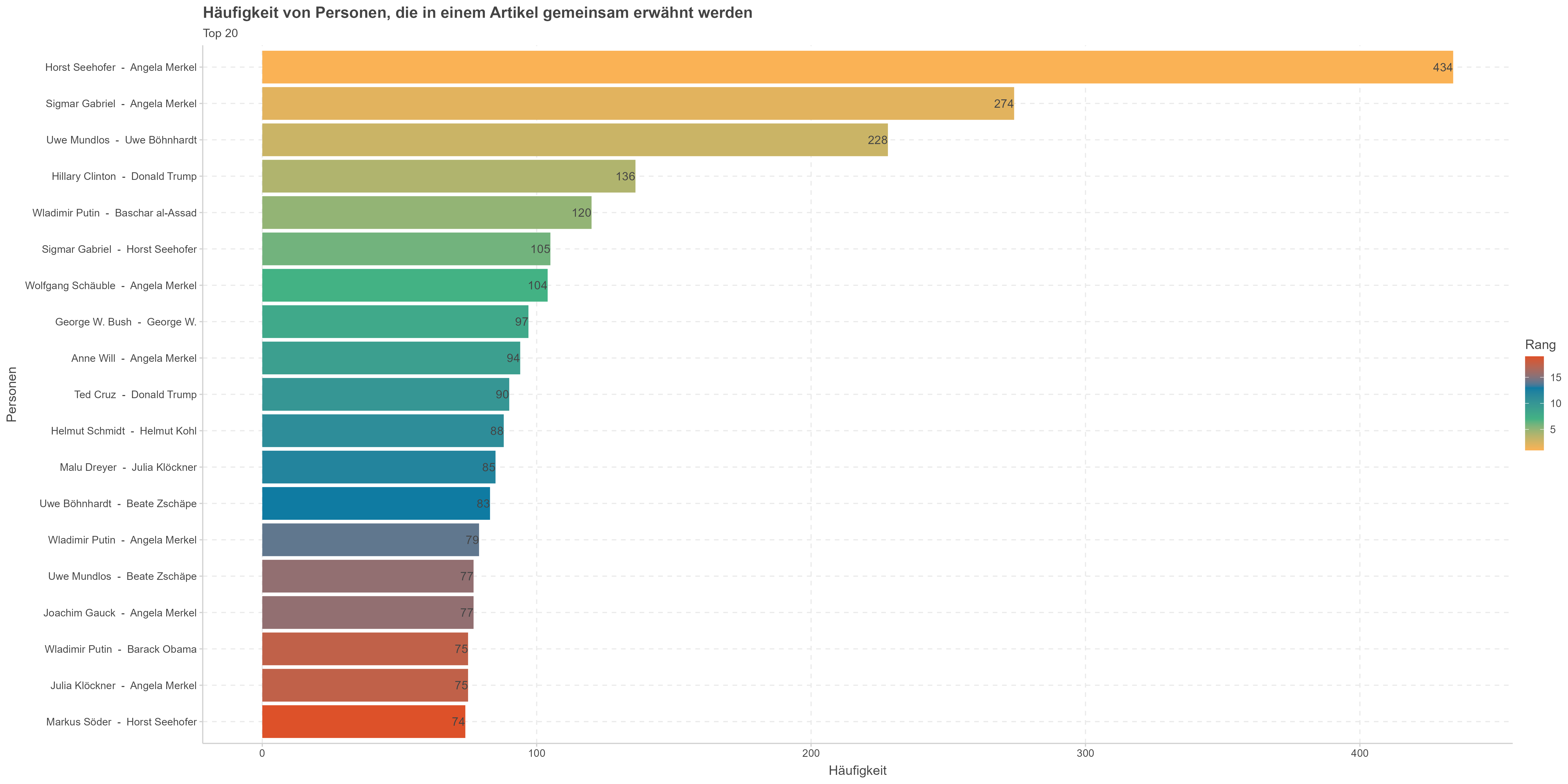

Combined with dependency parsing, PoS tagging goes beyond simple word counting by providing syntactic information about how words function in context. For example, the parse can distinguish between “refugee” as the subject or the object of a sentence, revealing whether refugees are portrayed as actors or as recipients of actions. This also allowed me to identify relationships between entities, such as which politicians were frequently mentioned together or in opposition to each other.
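Counting how often “Flüchtling” appears as a subject versus an object is straightforward once parser output exists. The sketch assumes each sentence is already available as (lemma, PoS tag, dependency label) triples, with labels in the style of the TIGER scheme used, for example, by spaCy's German models ("sb" subject, "oa" accusative object, "da" dative object). The sentences and the role mapping are hypothetical.

```python
from collections import Counter

# Hypothetical parser output: one list of (lemma, pos, dep) triples per sentence
parsed_sentences = [
    [("Flüchtling", "NOUN", "sb"), ("erreichen", "VERB", "ROOT"), ("München", "PROPN", "oa")],
    [("Polizei", "NOUN", "sb"), ("kontrollieren", "VERB", "ROOT"), ("Flüchtling", "NOUN", "oa")],
    [("Land", "NOUN", "sb"), ("helfen", "VERB", "ROOT"), ("Flüchtling", "NOUN", "da")],
]

# Assumed mapping from TIGER-style labels to coarse roles; other labels count as "other"
ROLE_OF = {"sb": "actor (subject)", "oa": "recipient (object)", "da": "recipient (object)"}


def role_counts(sentences, lemma):
    """Count the grammatical roles in which `lemma` appears as a noun."""
    counts = Counter()
    for sentence in sentences:
        for tok_lemma, pos, dep in sentence:
            if tok_lemma == lemma and pos == "NOUN":
                counts[ROLE_OF.get(dep, "other")] += 1
    return counts


print(role_counts(parsed_sentences, "Flüchtling"))
```

Comparing these counts across outlets or months would show whether refugees were increasingly written about as objects of state action rather than as actors.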

2.2.2 Data Collection and Preprocessing

The dataset comprised 15,414 articles from Bild Online and Spiegel Online published between July 2015 and April 2016. Articles were collected through web scraping of the publications’ archives and filtered using relevant keywords related to refugees and migration, including “Flüchtling” (refugee), “Migration” (migration), “Asyl” (asylum), and “Integration” (integration).
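Because German builds long compounds (“Flüchtlingsunterkunft”, “Asylrecht”), a keyword filter usually needs substring rather than whole-word matching. A minimal sketch, assuming simple case-insensitive substring matching on the four keywords above; the exact matching rules used for the corpus may have differed, and the headlines are invented. Substring matching also admits some false positives (for instance, “Integration” in unrelated contexts), so checking a sample by hand is worthwhile.

```python
KEYWORDS = ("flüchtling", "migration", "asyl", "integration")


def is_relevant(text, keywords=KEYWORDS):
    """True if any keyword occurs as a substring, so compounds also match."""
    lowered = text.casefold()
    return any(kw.casefold() in lowered for kw in keywords)


# Invented headlines for illustration
articles = [
    "Neue Flüchtlingsunterkunft in Hamburg eröffnet",
    "Bundestag debattiert über das Asylrecht",
    "Bundesliga: Bayern gewinnt erneut",
]
relevant = [a for a in articles if is_relevant(a)]
print(relevant)
```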

Preprocessing steps included:

- Tokenization (breaking text into individual words or tokens)
- Removal of stopwords (common words with little semantic content)
- Lemmatization (reducing words to their base forms)
- Part-of-speech tagging and parsing (identifying grammatical structures)

These steps transformed the raw text into structured data suitable for computational analysis while preserving the semantic content needed for meaningful interpretation.
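The first three preprocessing steps can be sketched in a few lines. This is only an illustration: the stopword list and lemma dictionary below are tiny hypothetical stand-ins for the full German resources a real pipeline would use, and PoS tagging is left out because it requires a trained model.

```python
import re

# Hypothetical mini-resources; real pipelines use full stopword lists and trained lemmatizers
STOPWORDS = {"der", "die", "das", "den", "und", "in", "am", "an", "ist", "sind"}
LEMMAS = {"flüchtlinge": "flüchtling", "kamen": "kommen", "bahnhöfen": "bahnhof"}


def tokenize(text):
    # Step 1: split into lowercase word tokens (letters including umlauts and ß)
    return re.findall(r"[a-zäöüß]+", text.lower())


def preprocess(text):
    tokens = tokenize(text)
    # Step 2: drop stopwords
    tokens = [t for t in tokens if t not in STOPWORDS]
    # Step 3: map inflected forms to base forms where the dictionary knows them
    return [LEMMAS.get(t, t) for t in tokens]


print(preprocess("Die Flüchtlinge kamen am Wochenende an den Bahnhöfen an."))
```

Lemmatization matters for German in particular: without it, “Flüchtlinge”, “Flüchtlingen”, and “Flüchtling” would be counted as three different words by the topic model.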

2.2.3 Mixed-Methods Approach

To validate the AI-generated results, I employed a mixed-methods approach that combined computational analysis with manual verification. For selected articles with particularly high or low sentiment scores, I conducted traditional qualitative content analyses to confirm that the automated sentiment ratings aligned with human interpretation.

This approach addresses a central challenge in using machine learning for research: the “black box” problem. As models become more complex, understanding why they produce certain results becomes increasingly difficult. The mixed-methods validation strategy provided greater confidence in the results while maintaining the efficiency benefits of automation.
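Selecting the articles with the most extreme scores for manual review can be automated. A minimal sketch, assuming each article is a record with `outlet` and `sentiment` fields and that the k most negative and k most positive articles per outlet are reviewed; the value of k, the record layout, and the scores are invented for illustration.

```python
from collections import defaultdict


def extremes_for_review(articles, k=2):
    """Return the k lowest- and k highest-scoring articles per outlet.

    If an outlet has fewer than 2k articles, the two lists overlap.
    """
    by_outlet = defaultdict(list)
    for art in articles:
        by_outlet[art["outlet"]].append(art)
    selection = {}
    for outlet, arts in by_outlet.items():
        ranked = sorted(arts, key=lambda a: a["sentiment"])
        selection[outlet] = {
            "most_negative": ranked[:k],
            "most_positive": ranked[-k:][::-1],  # highest score first
        }
    return selection


# Invented records
articles = [
    {"id": 1, "outlet": "Bild", "sentiment": -0.62},
    {"id": 2, "outlet": "Bild", "sentiment": 0.41},
    {"id": 3, "outlet": "Bild", "sentiment": 0.05},
    {"id": 4, "outlet": "SPON", "sentiment": -0.30},
    {"id": 5, "outlet": "SPON", "sentiment": 0.52},
]
review = extremes_for_review(articles, k=1)
print({o: [a["id"] for a in sel["most_negative"]] for o, sel in review.items()})
```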

2.3 Historical Context: The 2015/2016 Refugee Crisis

To provide context for the findings, I compiled a timeline of the pivotal events that shaped the discourse during the crisis:

July 4, 2015: The Alternative for Germany (AfD) party undergoes a leadership change, with Frauke Petry replacing Bernd Lucke as chairperson, signaling the party’s shift further to the right. According to Weiland (2015), this leadership change marked a turning point where “the moderates in the AfD, who originally joined the party out of concern for euro policy, have been outplayed,” indicating the party’s rightward trajectory.

August 31, 2015: Chancellor Angela Merkel delivers her famous “Wir schaffen das” (“We can do this”) statement at the Federal Press Conference, projecting optimism about Germany’s capacity to handle the influx of refugees. Interestingly, Spiegel’s initial report on the press conference (Gathmann and Weiland 2015) did not quote this phrase directly, even though it later became the defining slogan of Merkel’s refugee policy.

September 3, 2015: The photo of Alan Kurdi, a two-year-old Syrian boy who drowned in the Mediterranean Sea, is published, sparking international outrage and empathy. Spiegel (2015c) reported that this image generated profound emotional responses globally.

September 6, 2015: Germany opens its borders to refugees stranded in Hungary, effectively opening the “Balkan route.” Volunteers welcome refugees at train stations across Germany. Spiegel (2015b) described scenes of applause as refugees arrived at German train stations.

September 7, 2015: Additional financial aid for municipalities is announced. Spiegel (2015e) reported that while municipalities would receive more funding to support refugees, asylum rules would simultaneously be tightened.

September 29, 2015: The federal cabinet approves the first “Asylum Package,” which introduces stricter regulations for asylum seekers, including faster deportation procedures, restrictions on benefits, and the designation of additional “safe countries of origin.” Spiegel (2015a) outlined how these changes aimed to expedite asylum procedures and reduce incentives for economic migration.

November 13, 2015: ISIS terrorist attacks in Paris kill over 120 people, intensifying concerns about security risks associated with migration. Lüdke et al. (2015) covered the attacks, which occurred at multiple locations including the Bataclan concert hall.

December 11, 2015: “Flüchtling” (refugee) is declared Word of the Year by the Society for German Language, reflecting its dominance in public discourse. Spiegel (2015d) noted that the word was selected because, in linguistic terms, it had accompanied political, economic, and social life in a special way.

December 31, 2015 (New Year’s Eve): Mass sexual assaults in Cologne and other German cities, with perpetrators described by police as being “of North African and Arab appearance,” become a pivotal moment in the discourse. Diehl et al. (2016) reported that the incidents were not widely covered until several days later, with social media discussions intensifying as more details emerged.

January 12, 2016: “Gutmensch” (do-gooder) is declared Non-word of the Year 2015, highlighting the increasingly contentious discourse around refugee assistance. Spiegel (2016d) explained that the term was used to disparage those who volunteered to help refugees or opposed attacks on refugee shelters.

February 3, 2016: The federal cabinet approves the second “Asylum Package,” which restricts family reunification, classifies more countries as “safe countries of origin,” and requires asylum seekers to contribute to the cost of integration courses. Spiegel (2016a) reported that these changes were adopted after lengthy internal disputes within the coalition government.

February 18, 2016: In Clausnitz, a xenophobic mob blocks a bus carrying refugees, chanting “We are the people!” Spiegel (2016b) described how protesters prevented the bus from proceeding and how police forcibly removed refugees from the vehicle.

March 18, 2016: The EU and Turkey conclude an agreement aimed at stopping irregular migration from Turkey to Europe in exchange for financial aid and visa liberalization. Spiegel (2016c) detailed how the agreement would return refugees who reached Greece via Turkey back to Turkey, while the EU would provide Turkey with financial assistance and concessions on visa requirements.

March 22, 2016: ISIS terrorist attacks in Brussels kill at least 31 people, further influencing the security dimension of the migration debate.

This chronology highlights how the refugee crisis discourse evolved from humanitarian concerns to security issues and political polarization. It provides essential context for interpreting the sentiment patterns observed in the media analysis.

2.4 Findings: The Shifting Media Narrative

2.4.1 Overall Sentiment Trend

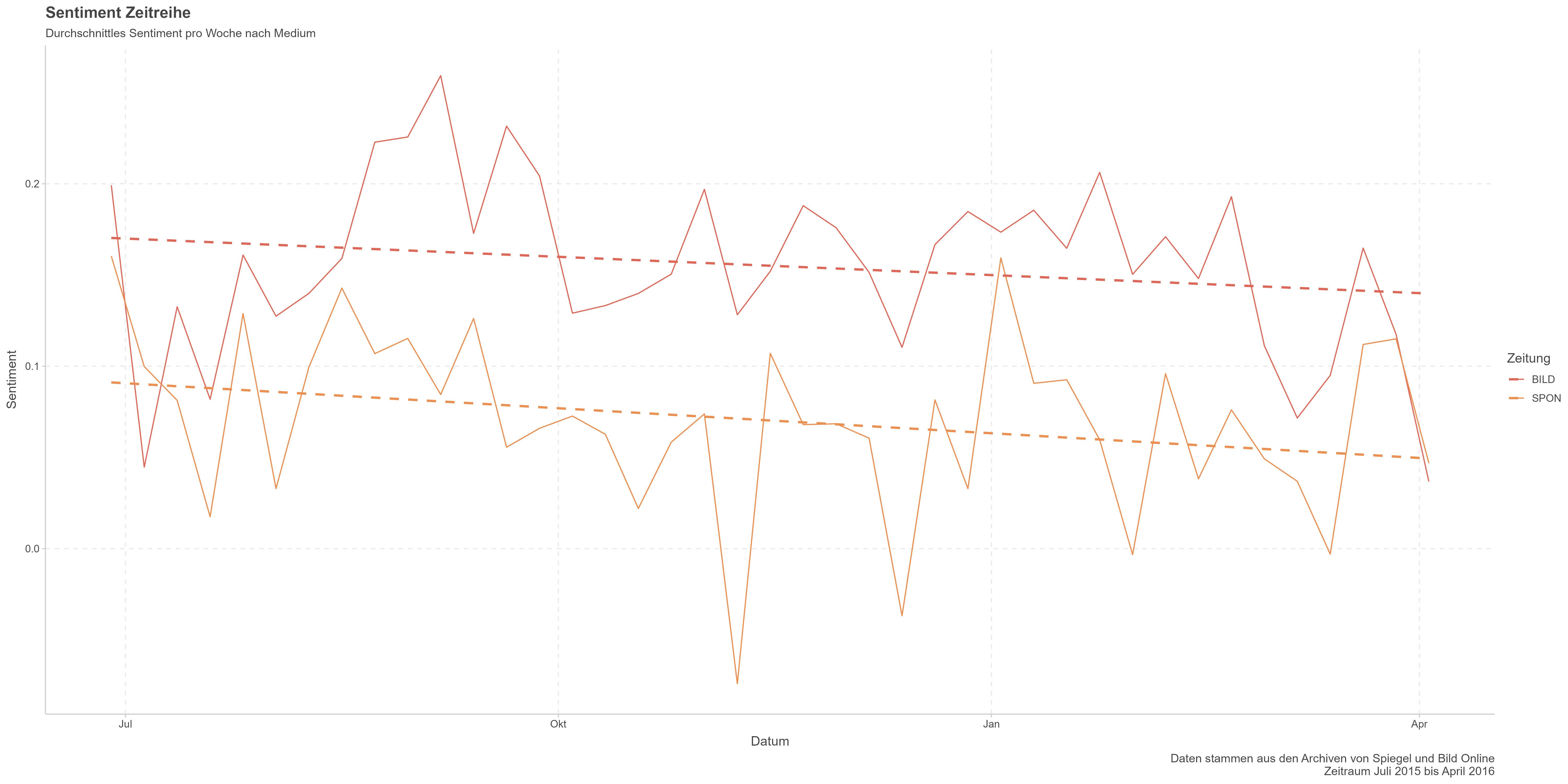

The sentiment analysis revealed a clear downward trend over time, particularly after the Cologne incidents:

Both publications showed a declining sentiment trajectory, but Bild experienced a more dramatic shift. While the summer of 2015 began with relatively positive coverage (average sentiment values around 0.18 for Bild and 0.15 for SPON), sentiment had declined markedly by April 2016, with Bild’s average sentiment dropping by approximately 30%.

This decline was not constant but showed distinct phases:

1. Initial positivity (July-September 2015): Coverage emphasized humanitarian aspects and “Willkommenskultur” (welcome culture).
2. Gradual decline (October-December 2015): Increasing focus on challenges and policy debates.
3. Sharp decline (January-February 2016): Following the Cologne incidents, a pronounced negativity emerged.
4. Stabilization at lower levels (March-April 2016): Sentiment remained at its reduced level but showed less volatility.

The different rates of decline between the two publications are particularly revealing. Bild’s more dramatic shift suggests greater editorial responsiveness to changing political winds, or possibly greater susceptibility to right-wing narratives, which would align with the readership patterns reported by Barthels et al. (2021).
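The monthly averages behind a trend line like this, and a relative change such as the one quoted for Bild, can be computed from per-article scores. A minimal sketch on invented toy records; it assumes relative change means (last monthly mean - first monthly mean) / |first monthly mean|.

```python
from collections import defaultdict
from statistics import mean


def monthly_means(records):
    """Mean sentiment per (outlet, 'YYYY-MM') from (date_str, outlet, score) records."""
    groups = defaultdict(list)
    for date_str, outlet, score in records:
        groups[(outlet, date_str[:7])].append(score)  # 'YYYY-MM-DD' -> 'YYYY-MM'
    return {key: mean(scores) for key, scores in sorted(groups.items())}


def relative_change(means, outlet):
    """Relative change between an outlet's first and last monthly mean.

    Raises ZeroDivisionError if the first monthly mean is exactly 0.
    """
    months = sorted(m for (o, m) in means if o == outlet)
    first, last = means[(outlet, months[0])], means[(outlet, months[-1])]
    return (last - first) / abs(first)


# Invented toy records, not values from the corpus
records = [
    ("2015-07-03", "Bild", 0.20), ("2015-07-19", "Bild", 0.16),
    ("2016-04-02", "Bild", 0.12), ("2016-04-21", "Bild", 0.14),
]
means = monthly_means(records)
print(f"{relative_change(means, 'Bild'):.0%}")  # -28%
```

Monthly means are sensitive to how many articles fall into each month, so it is worth reporting the article count per month alongside them.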

2.4.2 Topic Modeling Results

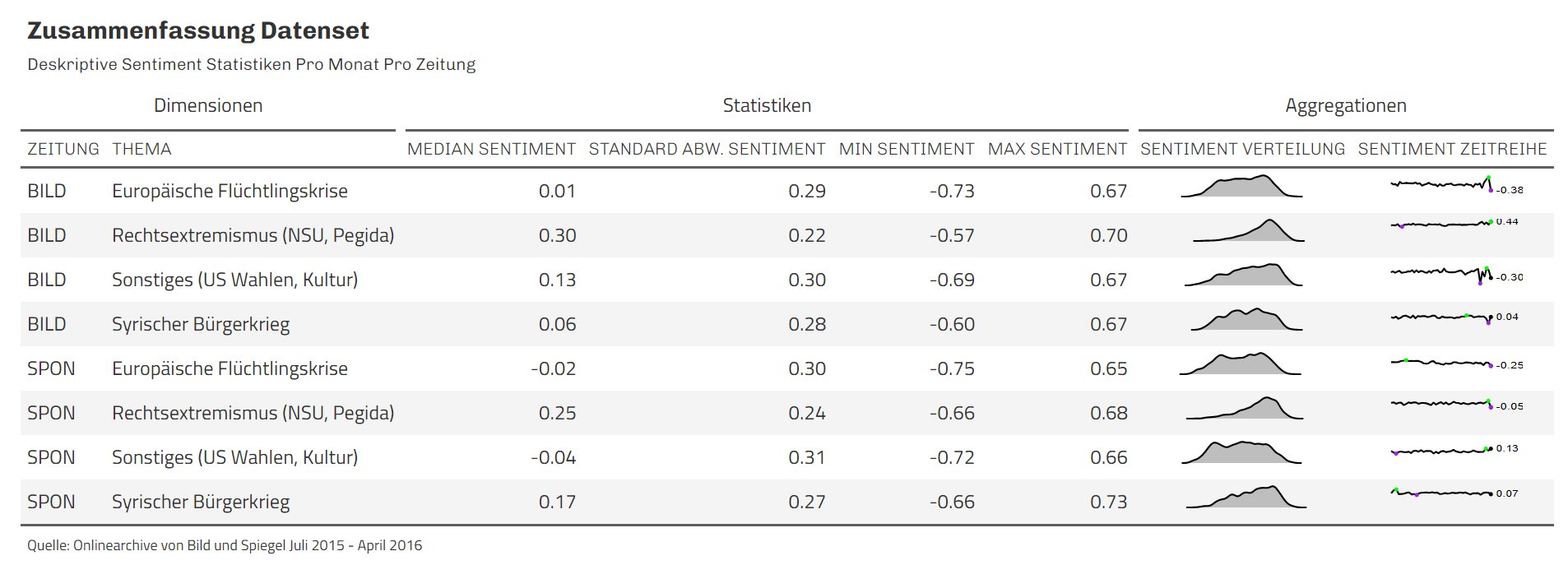

The STM analysis identified four distinct topics in the coverage:

Rechtsextremismus (Right-wing extremism): Coverage of far-right demonstrations, attacks on refugee shelters, and extremist movements like PEGIDA. Key terms included “rechts” (right), “demonstration” (demonstration), “pegida,” and “protest.”

Islamischer Terrorismus (Islamic terrorism): Articles about terrorist attacks, ISIS, and security concerns. This topic was characterized by terms like “anschlag” (attack), “terror,” “is” (ISIS), and “paris.”

Europäische Flüchtlingskrise (European refugee crisis): The core topic covering policy debates, refugee accommodations, integration efforts, and EU-level discussions. Prominent terms included “flüchtling” (refugee), “europa” (Europe), “grenze” (border), and “türkei” (Turkey).

Sonstiges (Miscellaneous): Various topics not fitting into the other categories, including cultural aspects and international developments.

The topic modeling revealed that articles were not distributed evenly across topics, with the “European refugee crisis” dominating the coverage. This dominance reflects the centrality of policy debates and border management issues in the media narrative.
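One simple way to quantify this imbalance is to assign each article to the topic with the largest share in its document-topic vector (STM's θ) and count how often each topic comes first. The θ rows below are invented, and a single “dominant” topic is a simplification, since STM treats every article as a mixture of topics.

```python
from collections import Counter

TOPICS = ["Rechtsextremismus", "Islamischer Terrorismus",
          "Europäische Flüchtlingskrise", "Sonstiges"]


def dominant_topic_shares(theta, topics=TOPICS):
    """Share of documents whose largest topic proportion falls on each topic."""
    dominant = Counter(topics[max(range(len(row)), key=row.__getitem__)] for row in theta)
    n = len(theta)
    return {t: dominant[t] / n for t in topics}


theta = [  # hypothetical document-topic proportions; each row sums to 1
    [0.10, 0.05, 0.75, 0.10],
    [0.60, 0.10, 0.20, 0.10],
    [0.05, 0.15, 0.70, 0.10],
    [0.10, 0.55, 0.30, 0.05],
]
shares = dominant_topic_shares(theta)
print(shares)
```

An alternative that keeps the mixed-membership idea is to average the θ columns instead, which gives each topic's expected share of the corpus.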

The interrelationships between topics also provided insights into how the refugee discourse was structured. The “European refugee crisis” and “Islamic terrorism” topics showed increasing correlation over time, suggesting a growing association between migration and security concerns in the media framing. This association intensified particularly after the Paris attacks in November 2015.

2.4.3 Sentiment by Topic

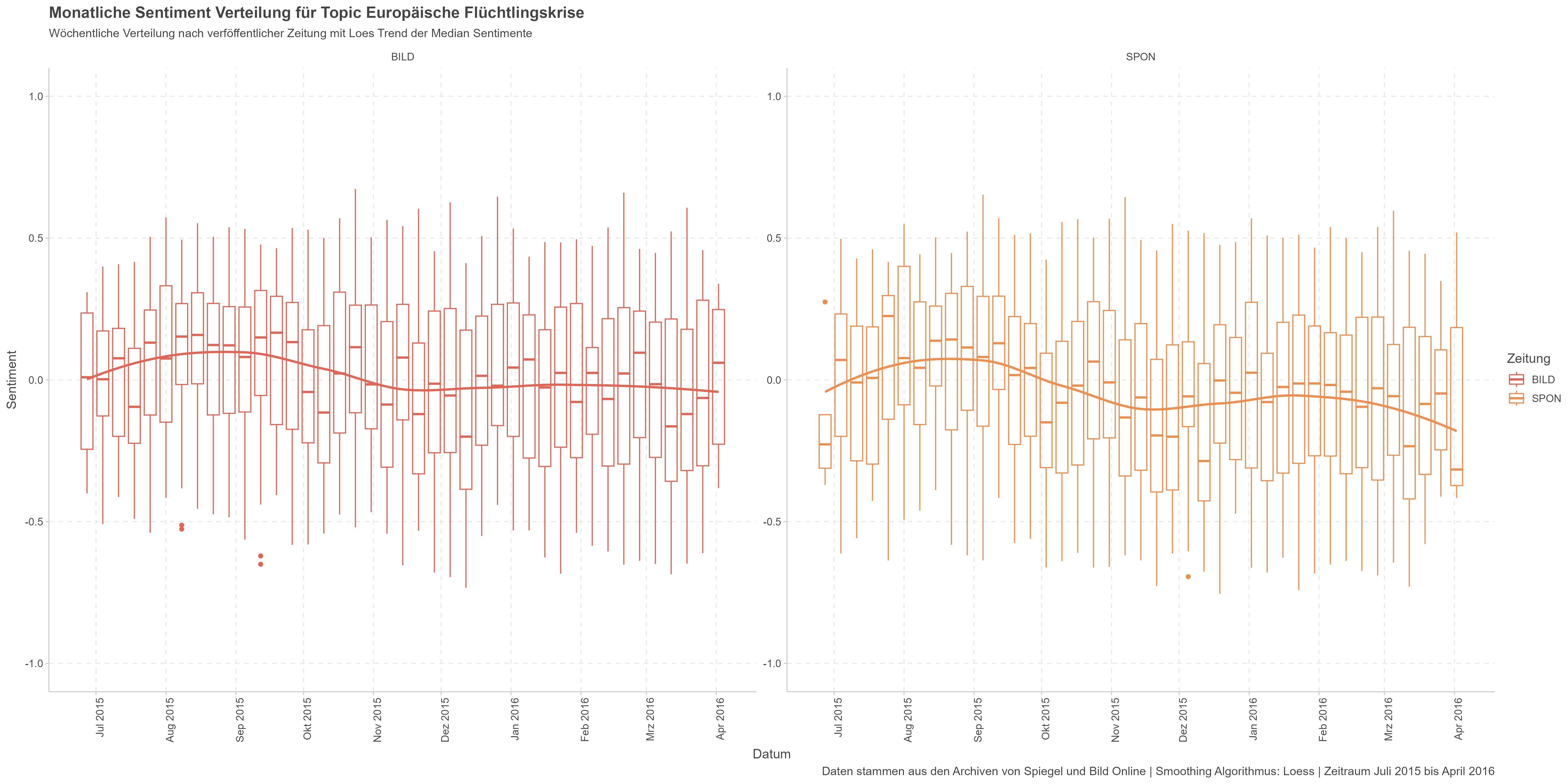

When analyzing sentiment by topic, the decline was most pronounced in the “European refugee crisis” category:

The sentiment drop for the “European refugee crisis” topic was particularly steep, suggesting that the core narrative about refugees and migration underwent the most significant shift. Other topics showed less dramatic changes, though Islamic terrorism coverage became increasingly negative in Bild’s reporting.

This differential pattern across topics reveals important nuances in how the refugee crisis was framed over time:

European refugee crisis: From July 2015 to April 2016, sentiment decreased dramatically for both publications (from approximately +0.2 to near zero or negative). This pattern indicates that the core refugee narrative shifted from broadly positive to neutral or negative, with the steepest decline occurring after the Cologne incidents.

Islamic terrorism: This topic started with negative sentiment values and remained negative throughout the period. Bild’s coverage showed a more pronounced negative trend than SPON’s, potentially reflecting different editorial approaches to security issues.

Right-wing extremism: Coverage of this topic maintained relatively stable sentiment values for both publications, suggesting that editorial stances toward right-wing extremism remained consistent despite changing attitudes toward refugees.

Miscellaneous: This category showed moderate declines in sentiment, particularly for Bild, indicating that the negative shift extended beyond obviously refugee-related content to affect broader coverage.

These patterns demonstrate how the refugee crisis narrative evolved from a humanitarian framing toward a problem-oriented framing, while coverage of associated topics like terrorism and extremism remained more consistent in their sentiment profiles.
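Because each article is a mixture of topics, one option for sentiment by topic is to weight every article's score by its proportion of the topic in question rather than assigning it to a single topic. A minimal sketch with invented values; this is one possible approach, not necessarily the one behind the figure above. Applying it per outlet and month yields topic-specific trend lines.

```python
def topic_weighted_sentiment(articles, topic_index):
    """Mean sentiment weighted by each article's proportion of one topic.

    articles: list of (sentiment_score, theta_row) pairs, where theta_row holds
    the article's topic proportions (hypothetical layout).
    """
    weights = [theta[topic_index] for _, theta in articles]
    total = sum(weights)
    if total == 0:
        raise ValueError("topic has no weight in these articles")
    return sum(score * w for (score, _), w in zip(articles, weights)) / total


# Invented example; topic order follows the four STM topics listed earlier
REFUGEE_CRISIS, TERRORISM = 2, 1
month = [
    (0.30, [0.1, 0.1, 0.7, 0.1]),   # mostly refugee-crisis article, positive
    (-0.20, [0.1, 0.7, 0.1, 0.1]),  # mostly terrorism article, negative
]
print(round(topic_weighted_sentiment(month, REFUGEE_CRISIS), 4))
print(round(topic_weighted_sentiment(month, TERRORISM), 4))
```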

2.4.4 Sentiment Distribution Analysis

Beyond the mean sentiment trends, I analyzed how the distribution of sentiment values changed over time:

Starting in November 2015, and accelerating after the Cologne incidents, the reporting became more polarized. Spiegel’s minimum sentiment values dropped markedly, indicating more negative extremes in the coverage. The median sentiment also declined for both publications, showing that the negative shift wasn’t just due to a few outlier articles but represented a broader trend.

The box plots reveal several critical patterns:

Increasing variance: Both publications showed greater dispersion in sentiment values after December 2015, with wider interquartile ranges and more extreme outliers. This suggests that coverage became more diverse in tone, potentially reflecting a wider range of perspectives entering the discourse.

Asymmetrical expansion: The negative extremes expanded more dramatically than positive extremes, creating increasingly left-skewed distributions. This asymmetry indicates that while some positive narratives persisted, intensely negative framing became more common.

Differential patterns between outlets: While both publications showed increased variance, Spiegel maintained slightly higher median values throughout the period despite occasionally publishing more extremely negative articles. This suggests that Spiegel’s editorial approach remained somewhat more refugee-friendly overall, even as it incorporated more critical perspectives.

The changing distributions reflect how the discourse became more complex and controversial over time. The initial period showed relatively narrow sentiment distributions centered in positive territory, suggesting editorial consensus around a welcoming narrative. As the crisis progressed, this consensus fragmented into more diverse—and often more negative—perspectives.
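The quantities behind such box plots (minimum, quartiles, median, interquartile range) plus a skewness measure can be computed per month with the standard library. A sketch on invented scores; skewness here is the Fisher-Pearson moment coefficient, where a negative value corresponds to the left skew (longer negative tail) described above.

```python
from statistics import fmean, median, pstdev, quantiles


def distribution_summary(scores):
    """Box-plot statistics plus moment skewness for one month's sentiment scores."""
    # 'inclusive' quartiles interpolate linearly between data points
    # (comparable to R's default quantile type)
    q1, _, q3 = quantiles(scores, n=4, method="inclusive")
    mu, sigma = fmean(scores), pstdev(scores)
    # Fisher-Pearson moment coefficient; negative means a longer tail toward negative scores
    skew = (sum((x - mu) ** 3 for x in scores) / (len(scores) * sigma ** 3)
            if sigma > 0 else 0.0)
    return {"min": min(scores), "q1": q1, "median": median(scores), "q3": q3,
            "max": max(scores), "iqr": q3 - q1, "skew": skew}


# Invented scores for two months (not data from the corpus)
before = [0.10, 0.15, 0.18, 0.20, 0.22, 0.25]
after = [-0.60, -0.35, 0.05, 0.10, 0.12, 0.15]
for label, scores in (("before", before), ("after", after)):
    s = distribution_summary(scores)
    print(label, round(s["median"], 3), round(s["iqr"], 3), round(s["skew"], 2))
```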

2.4.5 Political Party Mentions

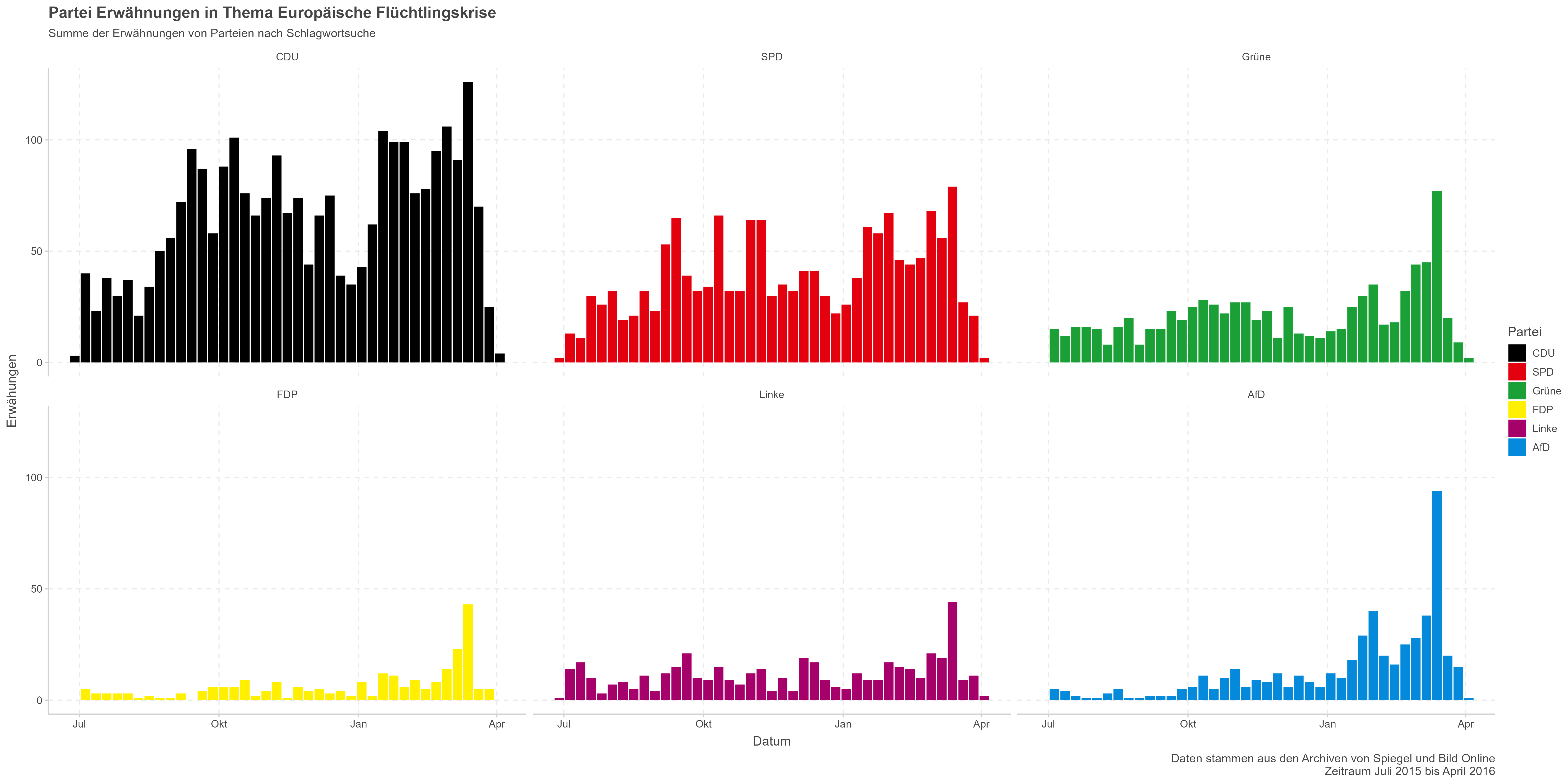

An intriguing pattern emerged when analyzing political party mentions in the coverage:

While all parties saw increased mentions toward the end of the observation period, the Alternative for Germany (AfD) experienced the most dramatic proportional increase. This coincided with the decline in sentiment and followed the party’s shift to a more right-wing orientation under Frauke Petry’s leadership.

Examining the specific patterns:

AfD: From relative obscurity in summer 2015, AfD mentions increased dramatically after the Cologne incidents, showing the highest proportional growth among all parties. This surge reflects how the party gained media prominence as it positioned itself as the primary critic of Merkel’s refugee policy.

CDU/CSU: As the governing party responsible for refugee policy, the CDU/CSU maintained high mention frequencies throughout the period, with modest increases toward the end as internal party divisions over refugee policy intensified.

SPD: As the junior coalition partner, the SPD received consistent coverage, with a moderate increase in mentions during the later period as policy debates intensified.

Opposition parties (Greens, Left Party, FDP): These parties saw modest increases in mentions, particularly after January 2016, reflecting how the refugee debate broadened to include more diverse political perspectives.

The increasing prominence of the AfD in media discourse supports the observation by Haller (2017) that opposition voices gained more attention as the crisis progressed, contributing to a more negative framing of the refugee situation. The timing of this increase, concentrated after the Cologne incidents, suggests that these events created a media opportunity structure that benefited critics of the government’s refugee policy.
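A proportional measure like this can be computed as the share of articles per month that mention each party, so that months with more coverage overall are not automatically inflated. A sketch with hypothetical regular expressions and invented headlines; real party matching needs more care (for example, “Bündnis 90/Die Grünen”, or “Linke” capitalized at the start of a sentence).

```python
import re
from collections import Counter, defaultdict

# Hypothetical patterns; \b stops matches inside longer words
PARTY_PATTERNS = {
    "AfD": re.compile(r"\bAfD\b"),
    "CDU/CSU": re.compile(r"\b(?:CDU|CSU)\b"),
    "SPD": re.compile(r"\bSPD\b"),
    "Grüne": re.compile(r"\bGrünen?\b"),
    "Linke": re.compile(r"\bLinken?\b"),
    "FDP": re.compile(r"\bFDP\b"),
}


def mentions_per_article(articles):
    """Per month: share of articles mentioning each party at least once."""
    counts = defaultdict(Counter)
    totals = Counter()
    for month, text in articles:
        totals[month] += 1
        for party, pattern in PARTY_PATTERNS.items():
            if pattern.search(text):
                counts[month][party] += 1
    return {m: {p: counts[m][p] / totals[m] for p in PARTY_PATTERNS} for m in sorted(totals)}


party_articles = [  # invented (month, text) pairs
    ("2015-08", "Die CDU verteidigt den Kurs der Kanzlerin."),
    ("2015-08", "SPD und CSU streiten über Obergrenzen."),
    ("2016-02", "AfD legt in Umfragen zu, CDU verliert."),
    ("2016-02", "Die AfD kritisiert die Regierung."),
]
shares = mentions_per_article(party_articles)
print(shares["2015-08"]["AfD"], shares["2016-02"]["AfD"])
```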

2.4.6 Key Actors Analysis

Through entity extraction, I identified the most frequently mentioned individuals in the corpus:

Angela Merkel dominated the discourse with nearly 3,000 mentions, followed by other key political figures like Horst Seehofer (885 mentions), Thomas de Maizière (823 mentions), and Sigmar Gabriel (764 mentions). This reflects the central role of government officials in shaping the refugee crisis narrative.

Several patterns emerge from this actor analysis:

Government centrality: The top four most-mentioned individuals were all government officials (Merkel, Seehofer, de Maizière, Gabriel), indicating that the refugee discourse was primarily framed around official policy responses rather than refugee experiences or civil society perspectives.

Opposition and foreign actors: Opposition politicians, as well as foreign leaders such as Recep Tayyip Erdoğan and Viktor Orbán, received substantially fewer mentions, suggesting limited media space for alternative policy approaches.

Absence of refugee voices: Notably, no refugee representatives appeared among the most frequently mentioned individuals, indicating that refugees themselves were primarily objects rather than subjects of the discourse. This aligns with the criticism by Haller (2017) that refugee experiences were underrepresented in media coverage.
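Mention counts like the ones above depend on mapping the different surface forms of a name to one person. A minimal sketch, assuming the entity strings have already been extracted per article and that a hand-written alias table resolves them; the table entries and the toy articles are hypothetical, and bare surnames can be ambiguous, so such tables need checking.

```python
from collections import Counter

# Hypothetical alias table: surface form -> canonical name
ALIASES = {
    "Merkel": "Angela Merkel", "Angela Merkel": "Angela Merkel",
    "Seehofer": "Horst Seehofer", "Horst Seehofer": "Horst Seehofer",
    "de Maizière": "Thomas de Maizière", "Thomas de Maizière": "Thomas de Maizière",
}


def count_people(extracted_entities):
    """Total mentions per canonical person; unknown surface forms are ignored."""
    counts = Counter()
    for entities in extracted_entities:  # one list of entity strings per article
        counts.update(ALIASES[e] for e in entities if e in ALIASES)
    return counts


per_article = [  # invented extraction output
    ["Angela Merkel", "Merkel", "Seehofer"],
    ["Thomas de Maizière", "Merkel"],
    ["Obama"],
]
print(count_people(per_article).most_common(3))
```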

I also analyzed sentiment trends in articles mentioning Merkel across different topics:

Sentiment in Merkel-related articles varied both between the two publications and across topics, which highlights the value of combining topic modeling with entity analysis. When focusing on Merkel, several topic-specific patterns emerged:

European refugee crisis: SPON showed a clear negative trend in articles mentioning Merkel in this context, while Bild showed relatively stable sentiment. This divergence suggests different editorial approaches to evaluating Merkel’s refugee policy.

Syrian Civil War: Both publications showed declining sentiment when discussing Merkel in relation to Syria, potentially reflecting growing pessimism about diplomatic solutions to the conflict.

Miscellaneous: Bild’s coverage showed a pronounced negative trend in this category, suggesting a broader souring of its portrayal of the Chancellor beyond specifically refugee-related topics.

These patterns indicate that the media’s treatment of Merkel became increasingly complex and topic-dependent as the crisis progressed. While she remained the central figure in the discourse, her portrayal varied significantly across publications and topical contexts.

Beyond individual actors, I also analyzed co-mentions—instances where two individuals were mentioned in proximity within articles:

The most frequent co-mentions typically involved Chancellor Merkel with other political figures, reflecting her central role in the refugee crisis discourse. The visualization reveals not only the political networks that dominated the coverage but also how different actors were associated with each other in the media narrative.
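The post does not define "in proximity" precisely. Assuming proximity means "in the same sentence", and that person names have already been normalized, co-mentions can be counted as unordered pairs. The nested input format below is a hypothetical choice.

```python
from collections import Counter
from itertools import combinations


def co_mentions(articles):
    """Count unordered pairs of persons mentioned in the same sentence.

    `articles` is a list of articles; each article is a list of sentences;
    each sentence is a list of canonical person names found in it.
    """
    pairs = Counter()
    for article in articles:
        for persons in article:
            # set() ignores repeated mentions within one sentence;
            # sorted() makes (A, B) and (B, A) the same key.
            for a, b in combinations(sorted(set(persons)), 2):
                pairs[(a, b)] += 1
    return pairs


if __name__ == "__main__":
    demo = [
        [["Angela Merkel", "Horst Seehofer"],
         ["Angela Merkel", "Recep Tayyip Erdoğan"]],
        [["Horst Seehofer", "Angela Merkel"]],
    ]
    for pair, n in co_mentions(demo).most_common():
        print(n, pair)
```

Sentence-level windows produce fewer spurious pairs than counting every pair in a whole article, but they miss relations drawn across sentences. The resulting counts can serve as edge weights for a network library such as networkx.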

Several prominent co-mention patterns emerged:

Government relationships: The most frequent co-mentions involved Merkel with key cabinet members (Seehofer, de Maizière, Gabriel), reflecting media focus on internal government dynamics and potential conflicts.

International dimensions: Co-mentions of Merkel with international leaders (Erdoğan, Hollande, Orbán) highlight the European and international framing of the crisis.

Political opposition: Co-mentions of AfD politicians such as Frauke Petry with government figures illustrate how the media increasingly positioned the AfD as the primary opposition to the government’s refugee policy.

Separate narrative clusters: Some co-mentions formed distinct clusters unrelated to refugee policy (e.g., NSU trial figures like Zschäpe, Mundlos, and Böhnhardt), indicating how multiple news narratives operated simultaneously during this period.

This network analysis provides insights into how media coverage constructed relationships between political actors, potentially influencing public perception of political alliances and conflicts during the crisis.

2.5 Validating AI Results: Manual Content Analysis

To validate the AI-generated sentiment scores, I conducted in-depth analyses of selected articles with extreme sentiment values:

2.5.1 Case Study 1: Negative Sentiment in Bild

I examined a Bild article titled “Menschen bei Maischberger: CSU-Politiker schließt Koalition mit AfD nicht aus” (“People at Maischberger: CSU politician does not rule out coalition with AfD”, December 9, 2015), which received a highly negative sentiment score (-0.6960).

The article covered a TV debate where CSU politician David Bendels called Merkel “part of the problem” and engaged in heated exchanges with Green politician Jürgen Trittin. The article not only reported on these confrontational exchanges but added its own negative tone through editorial commentary that mocked participants. For example, the article derided Bendels: “CSU politician Bendels laid it on thick twice: with his gel hairstyle and the federal political significance of the CSU.”

The article’s conclusion was particularly dismissive: “CSU politician Bendels had to add his two cents: ‘Chancellor Merkel is not in my party’ – No one would have come up with that on their own… That was a talk of the category: Time to switch off!”

A word-level sentiment analysis identified 23 negative and 15 positive terms, confirming the negative classification by the AI model. The manual analysis revealed that the negative sentiment emerged not only from the reported content (the TV debate) but also from the article’s own editorial voice, which amplified the confrontational aspects through mocking commentary and dismissive framing.
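The post does not say which dictionary was used for the word-level counts. As a rough sketch, a German sentiment lexicon can be stored as two word sets and polar terms counted per token. The tiny lexicon below is a hypothetical stand-in, not the one from the thesis.

```python
import re

# Hypothetical mini-lexicon; a real analysis would use a full German
# sentiment dictionary.
POSITIVE = {"erfolgreich", "hervorragend", "substanz"}
NEGATIVE = {"problem", "zyniker", "brandstifter", "abschalten"}


def count_polar_terms(text):
    """Return (negative_count, positive_count) over lower-cased word tokens."""
    tokens = re.findall(r"\w+", text.lower())
    neg = sum(token in NEGATIVE for token in tokens)
    pos = sum(token in POSITIVE for token in tokens)
    return neg, pos


if __name__ == "__main__":
    print(count_polar_terms(
        "Ein erfolgreicher Vorsitzender? Nein: ein Problem, Zyniker und Brandstifter."
    ))
```

The example returns (3, 0). The inflected "erfolgreicher" does not match the base form "erfolgreich", which is why dictionary counts are usually computed on lemmatized text.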

2.5.2 Case Study 2: Negative Sentiment in SPON

A Spiegel Online article titled “Oppermann ruft Gabriel zum Kanzlerkandidaten aus” (“Oppermann proclaims Gabriel chancellor candidate”, December 21, 2015) received a strongly negative score (-0.7550) despite containing both positive and negative elements.

While the article included positive statements about SPD’s Sigmar Gabriel (describing him as “a successful fighting SPD chairman with much political substance” and “an excellent vice chancellor and a highly successful economics minister”), it also contained sharp criticism of the CDU/CSU and described the AfD as “a band of cynics and intellectual arsonists.”

This mix of praise for one party and severe criticism of others resulted in an overall negative classification. Word-level analysis found 28 negative and 33 positive terms, so a simple term count would have rated the article as slightly positive. The model nevertheless assigned a negative score, and the manual reading supports that judgment: the context and the intensity of the negative characterizations outweighed the somewhat larger number of positive terms. This highlights the importance of contextual understanding in sentiment analysis.

The manual analysis revealed that the article’s negative sentiment stemmed primarily from:

1. References to internal SPD tensions despite Gabriel’s leadership

2. Sharp criticism of other political parties

3. Negative characterizations of the political climate surrounding the refugee crisis
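Putting the reported counts on a common scale shows why a term count and the model can disagree. The balance measure below, (positive - negative) / (positive + negative), is an illustrative choice of mine and not a metric from the thesis. The counts and model scores are the ones reported for the two case studies.

```python
def net_lexicon_score(neg, pos):
    """Balance of polar terms in [-1, 1]; 0.0 if no polar terms were found."""
    total = pos + neg
    return (pos - neg) / total if total else 0.0


# (negative terms, positive terms, model score) as reported for the case studies.
CASES = {
    "Bild (Maischberger)": (23, 15, -0.6960),
    "SPON (Oppermann/Gabriel)": (28, 33, -0.7550),
}

if __name__ == "__main__":
    for name, (neg, pos, model) in CASES.items():
        print(f"{name}: lexicon {net_lexicon_score(neg, pos):+.3f}, model {model:+.4f}")
```

The Bild article comes out at about -0.21 and the SPON article at about +0.08, while the model scored them -0.6960 and -0.7550. For the SPON article the two measures even disagree in sign, which is the gap between counting words and reading them in context.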

These case studies indicated that the AI sentiment model was capturing meaningful patterns in the discourse, though with nuances that required careful interpretation. They showed that sentiment analysis can detect not only explicit sentiment expressed through emotion-laden vocabulary but also subtler forms of negativity conveyed through framing, context, and editorial voice.
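The selection rule for the validation articles is only described as "extreme sentiment values". A minimal sketch, assuming the k lowest- and k highest-scoring articles per outlet are drawn for manual reading. The value of k and the field names are hypothetical.

```python
import heapq
from operator import itemgetter


def extreme_articles(articles, k=3):
    """Pick the k most negative and k most positive articles per outlet.

    Each article is a dict with (hypothetical) keys 'outlet', 'title' and
    'sentiment'. Returns {outlet: {"most_negative": [...], "most_positive": [...]}}.
    """
    by_outlet = {}
    for article in articles:
        by_outlet.setdefault(article["outlet"], []).append(article)
    by_score = itemgetter("sentiment")
    return {
        outlet: {
            "most_negative": heapq.nsmallest(k, items, key=by_score),
            "most_positive": heapq.nlargest(k, items, key=by_score),
        }
        for outlet, items in by_outlet.items()
    }
```

Reviewing extremes checks whether the model's clearest verdicts are plausible. Estimating its overall accuracy would additionally require a random sample coded by hand.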

2.6 Theoretical Interpretation: Framing, Agenda-Setting, and the Cascade Effect

My findings support key theories in media studies and provide quantitative evidence for their application to the refugee crisis coverage:

2.6.1 Framing Shifts

The sentiment analysis provides quantitative evidence for Haller’s (2017) observation that German media initially framed the refugee situation positively, promoting a “Willkommenskultur” (welcome culture) narrative. This framing shifted dramatically after the Cologne incidents, when opposition voices, particularly from the AfD, gained more prominence.

My analysis adds nuance to this understanding by showing:

- The shift began before the Cologne incidents but accelerated significantly afterward

- The change varied by topic, with the core refugee crisis narrative experiencing the most dramatic shift

- Different media outlets exhibited varying patterns in how they framed specific aspects of the crisis

The framing transformation can be characterized along the four dimensions of Entman’s (1993b) framework:

Problem definition: Initially framed as a humanitarian challenge requiring collective effort (Merkel’s “We can do this”), the crisis was increasingly defined as a security, cultural, and economic problem.

Causal interpretation: Early framing emphasized war and persecution as causes of migration, while later coverage increasingly highlighted pull factors like Germany’s perceived openness.

Moral evaluation: The initial moral framing emphasized humanitarian obligation, while later coverage increasingly presented conflicting moral frameworks around security, cultural preservation, and economic sustainability.

Treatment recommendation: Policy recommendations shifted from facilitating integration to controlling borders and limiting migration.

These framing dimensions didn’t change uniformly across all coverage but evolved at different rates and with different intensities across outlets and topics, creating an increasingly complex media landscape.

2.6.2 The Cascade Effect

The cascade effect described by Entman (2003), in which frames flow from political elites through the media and are adopted relatively homogeneously by media outlets, is visible in my data. Initially, media largely reflected the government’s positive stance on refugees, with Chancellor Merkel as the dominant voice in the discourse.

Later, as opposition voices gained traction, the media landscape became more diverse and increasingly negative. The rising prominence of AfD mentions in particular suggests that media outlets began amplifying alternative political perspectives as the crisis progressed.

The vertical cascade worked as follows:

Elite framing: Initially, government officials (particularly Merkel) established a humanitarian, solution-oriented frame that dominated coverage.

Media transmission: This framing was transmitted relatively homogeneously by major media outlets, with limited space for alternative perspectives.

Frame contestation: Following events like the Cologne incidents, opposition elites (particularly from the AfD) offered counter-frames emphasizing security and cultural concerns.

Media diversification: Media coverage gradually incorporated these alternative frames, creating a more heterogeneous and increasingly negative discourse.

This shift raises important questions about the relationship between media coverage and public opinion: Did the media’s framing shift in response to changing public sentiment, or did the media actively shape how the public perceived the refugee crisis? While my analysis cannot definitively answer this question, it provides a quantitative foundation for further exploration of these dynamics.

2.6.3 Agenda-Setting Dynamics

Beyond framing effects, my analysis reveals important agenda-setting patterns. The increasing prominence of AfD mentions and the growing focus on topics like security and cultural compatibility demonstrate how the media agenda shifted over time.

This agenda shift is particularly evident in the changing distribution of topics in the coverage. While the “European refugee crisis” topic remained dominant throughout the period, its internal composition evolved. Initial coverage emphasized logistics, accommodation, and integration, while later coverage increasingly focused on criminality, cultural differences, and policy failures.

This evolving agenda likely influenced not only what the public thought about (refugees) but also how they thought about the issue (increasingly in terms of problems rather than solutions). The agenda-setting effect therefore concerned not just topic salience but also attribute salience: which aspects of the refugee situation received attention.
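Shifts in the distribution of topics can be tracked by averaging each document's topic proportions per month. The sketch below assumes a fitted topic model whose document-topic distributions are available as dicts (a hypothetical format). It covers only shares between topics. Tracing the internal composition of a single topic would additionally require comparing its top words or subtopics across periods.

```python
from collections import defaultdict
from datetime import date


def monthly_topic_shares(docs):
    """Mean document-topic proportion per calendar month.

    `docs` is an iterable of (publication_date, {topic: proportion}) pairs.
    Topics absent from a document's dict count as 0 for that document.
    Returns {(year, month): {topic: mean share}}, ordered by month.
    """
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(int)
    for published, proportions in docs:
        month = (published.year, published.month)
        counts[month] += 1
        bucket = sums[month]  # ensure the month exists even for empty dicts
        for topic, p in proportions.items():
            bucket[topic] += p
    return {
        month: {topic: total / counts[month] for topic, total in topics.items()}
        for month, topics in sorted(sums.items())
    }


if __name__ == "__main__":
    # Invented proportions for illustration only.
    docs = [
        (date(2015, 9, 3), {"European refugee crisis": 0.7, "Syrian Civil War": 0.3}),
        (date(2015, 9, 17), {"European refugee crisis": 0.5, "Syrian Civil War": 0.5}),
        (date(2016, 1, 8), {"European refugee crisis": 0.9, "Syrian Civil War": 0.1}),
    ]
    for month, shares in monthly_topic_shares(docs).items():
        print(month, {t: round(v, 2) for t, v in shares.items()})
```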

2.7 Methodological Reflections: Strengths and Limitations of AI-Powered Analysis

2.7.1 Interpretability Challenges

One significant challenge in working with machine learning (ML) and deep learning (DL) models for text analysis is interpretability. As models become more complex, understanding exactly why they produce certain results becomes increasingly difficult. This creates a “black box” problem where researchers may struggle to explain the reasoning behind AI-generated insights.

To address this challenge, I employed a mixed-methods approach that combined automated analysis with manual verification. This provided greater confidence in the results while maintaining the efficiency benefits of automation.

The interpretability issue exists on multiple levels:

Parameter interpretability: Complex models such as neural networks involve millions of parameters that cannot be meaningfully interpreted individually, unlike the coefficients of simpler models such as linear regression.

Feature importance: Understanding which textual features drive sentiment predictions can be challenging, especially when models consider contextual relationships between words.

Decision processes: The internal “reasoning” of models remains opaque, making it difficult to diagnose why certain articles receive particular sentiment scores.

For social science research, where explaining findings is as important as generating them, these interpretability challenges necessitate supplementary methods to validate and contextualize automated analyses.
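One common way to probe feature importance in a black-box model is occlusion. Each token is removed in turn, and the change in the score is recorded. The sketch works with any scoring function. `toy_score` is a hypothetical stand-in for a real sentiment model, and occlusion is not necessarily a method used in the thesis.

```python
from typing import Callable, List, Tuple


def occlusion_importance(
    tokens: List[str], score: Callable[[str], float]
) -> List[Tuple[str, float]]:
    """Leave-one-token-out attribution for a black-box text scorer.

    Importance of token i = score(full text) - score(text without token i),
    so positive values mark tokens that pushed the score up.
    """
    base = score(" ".join(tokens))
    importances = []
    for i, token in enumerate(tokens):
        reduced = " ".join(tokens[:i] + tokens[i + 1:])
        importances.append((token, base - score(reduced)))
    return importances


def toy_score(text: str) -> float:
    """Hypothetical stand-in for a sentiment model: +1 per 'gut', -1 per 'schlecht'."""
    words = text.lower().split()
    return float(words.count("gut") - words.count("schlecht"))


if __name__ == "__main__":
    for token, weight in occlusion_importance("das ist nicht gut".split(), toy_score):
        print(f"{token:>8}: {weight:+.1f}")
```

With this toy scorer, "nicht" gets zero weight because the scorer itself ignores negation. Applied to a contextual model, the same loop would show whether the model reacts to the negator. Occlusion needs one model call per token and cannot capture interactions between words that are removed together.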

2.7.2 Model Quality Considerations

The sentiment.ai model used in this study produced results that aligned well with human interpretation in the validation cases. However, it’s important to acknowledge that different models might produce different results, and the specific training data used to develop a model can influence its performance on particular topics.

Several model-related factors influenced the analysis:

Training data bias: Models trained primarily on certain types of text (e.g., product reviews or social media posts) may perform differently when applied to news articles.

Domain specificity: Language use in political discourse has unique characteristics that general-purpose models may not fully capture.

Contextual understanding: While modern models handle basic context well, they may struggle with complex rhetorical devices like sarcasm, implied meanings, or culturally specific references.

For future research, models specifically trained on news articles about political topics might offer even more accurate sentiment assessments. Additionally, developing models that can better account for contextual nuances, such as sarcasm or implied meaning, would further enhance the reliability of automated content analysis.

2.7.3 Data Limitations

While the corpus of 15,414 articles provided a robust foundation for analysis, several data limitations should be acknowledged:

Outlet selection: The analysis focused on only two publications, potentially missing important variations in how other media sources covered the crisis.

Text-only analysis: The study analyzed only textual content, excluding visual elements like images and videos that likely influenced how readers perceived the coverage.

Keyword filtering: Articles were selected based on specific keywords, potentially missing relevant articles that discussed refugees using different terminology.

Future studies could address these limitations by expanding the range of media sources, incorporating multimodal analysis of text and images, and employing more sophisticated methods for identifying relevant content.
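The keyword-filtering limitation is easy to reproduce. Below is a minimal sketch of a stem-based filter. The stems are hypothetical, and the thesis's actual keywords are not listed in this post. Substring matching catches German compounds but still misses refugees described with different vocabulary.

```python
import re

# Hypothetical keyword stems (not the thesis's actual selection keywords).
KEYWORD_STEMS = ["flüchtling", "asyl", "migrant"]

# Substring matching (no word boundaries) so that compounds such as
# "Flüchtlingsunterkunft" or "Asylpaket" are caught as well.
KEYWORD_PATTERN = re.compile("|".join(map(re.escape, KEYWORD_STEMS)), re.IGNORECASE)


def is_relevant(text: str) -> bool:
    """Return True if any keyword stem occurs anywhere in the text."""
    return KEYWORD_PATTERN.search(text) is not None


if __name__ == "__main__":
    # Invented example headlines.
    headlines = [
        "Neue Flüchtlingsunterkunft in Hamburg eröffnet",
        "Bundestag beschließt Asylpaket II",
        "Immer mehr Schutzsuchende erreichen Bayern",
        "Geflüchtete finden Arbeit im Handwerk",
    ]
    for headline in headlines:
        print(is_relevant(headline), headline)
```

The last two headlines are missed. "Schutzsuchende" and "Geflüchtete" refer to refugees without containing any of the stems, which is exactly the terminology gap a keyword-based selection can introduce.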

2.8 Conclusion: The Promise of AI-Powered Discourse Analysis

This research demonstrates that automated text processing can efficiently analyze thousands of documents, identifying trends and patterns that would be impractical to detect manually. The analysis revealed:

- A clear decline in sentiment toward refugees over the observation period

- A particularly pronounced negative shift in the topic “European refugee crisis”

- Increasing prominence of opposition voices, especially the AfD, correlating with sentiment decline

- Varying patterns of sentiment change across different topics and publications

The mixed-methods approach—combining automated analysis with manual verification—proved effective in establishing confidence in the results while maintaining the efficiency benefits of AI-powered analysis.

2.8.2 Methodological Implications

This study demonstrates several key methodological advancements for political communication research:

Scalable discourse analysis: By combining automated processing with strategic manual validation, researchers can analyze much larger text corpora than traditional methods allow, enabling more comprehensive and representative studies of media content.

Multi-dimensional analysis: The integration of sentiment analysis, topic modeling, and entity recognition enables researchers to examine not just what is being discussed, but how it is framed, which actors are involved, and how these elements interact over time.

Quantification of framing shifts: The approach provides numerical measures for tracking changes in media framing, allowing more precise analysis of when and how narrative shifts occur in response to events or political developments.

Cross-publication comparison: The standardized methodology facilitates direct comparisons between different media outlets, revealing subtle differences in editorial approaches that might be difficult to capture through qualitative methods alone.

These methodological innovations open new possibilities for studying political communication across various contexts and topics, from climate change coverage to election campaigns to public health communication.

2.8.3 Theoretical Contributions

Beyond methodological advancements, this research contributes to communication theory in several ways:

Quantitative evidence for framing dynamics: The findings provide quantitative support for Entman’s (1993b) framing theory, demonstrating how problem definitions, causal interpretations, moral evaluations, and treatment recommendations evolved over time in refugee coverage.

Cascade model validation: The analysis offers empirical evidence for Entman’s (2003) cascade model, showing how frames initially flowed from government sources but later diversified as opposition voices gained prominence.

Temporal dimension of agenda-setting: The findings highlight how agenda-setting operates not just through topic selection but through dynamic shifts in topic composition and framing over time, suggesting a more complex model of media influence than classic agenda-setting theory proposed.

These theoretical contributions help refine our understanding of how media shapes public discourse, particularly during complex political crises where multiple stakeholders compete to define the narrative.

2.8.4 Implications for Media Literacy and Democracy

The findings have important implications for media literacy and democratic discourse:

Media literacy education: Understanding how media framing evolves during crises can help citizens become more critical consumers of news, aware of how narratives shift and which perspectives gain or lose prominence over time.

Editorial responsibility: For journalists and editors, the research highlights the importance of conscious framing decisions and the need to reflect on how certain events (like the Cologne incidents) can trigger rapid narrative shifts that may have lasting societal impacts.

Diverse voices in discourse: The relative absence of refugee voices in the coverage suggests a need for more inclusive approaches to reporting on crises that directly affect marginalized groups.

Political polarization dynamics: The increasing prominence of AfD mentions alongside declining sentiment illustrates how media coverage can both reflect and potentially amplify political polarization around contentious issues.

2.8.5 Future Research Directions

This study opens several promising avenues for future research:

Cross-national comparisons: Applying similar methods to analyze refugee crisis coverage across different countries could reveal how national political contexts influence media framing.

Social media integration: Combining traditional media analysis with social media discourse analysis could illuminate the relationship between mainstream media framing and public opinion expression.

Long-term framing effects: Extending the timeframe of analysis beyond 2016 could reveal whether the observed framing shifts had lasting impacts on refugee discourse or were temporary responses to specific events.

Multimodal analysis: Incorporating analysis of images and videos would provide a more complete picture of how the refugee crisis was represented in media.

Model refinement: Developing sentiment models specifically trained on political news content could enhance the accuracy and interpretability of automated analysis.

Causal relationship testing: Designing studies that can test for causal relationships between media framing and public opinion would further enhance our understanding of media effects.

2.8.6 Concluding Thoughts

The 2015/2016 refugee crisis represented a pivotal moment in recent European history, with far-reaching political implications that continue to resonate today. This research demonstrates how computational methods can provide insights into the media’s role in shaping public discourse during such critical periods.

By revealing the patterns and dynamics of sentiment evolution, topic distribution, and actor representation, this study contributes to our understanding of how media framing influenced the refugee debate. The clear shift from predominantly positive to increasingly negative coverage, coupled with the rising prominence of alternative political voices, illustrates the complex interplay between events, political actors, and media representation.

For researchers interested in discourse analysis, this approach offers tremendous potential. It enables quantitative analysis of large text corpora while maintaining the depth that qualitative verification provides. As these tools continue to develop, they will become increasingly valuable for understanding how media shapes public discourse on critical social issues.

Beyond the specific findings about the refugee crisis coverage, this study demonstrates a methodological framework that can be applied to analyze media coverage of any complex social or political issue. By combining computational methods with established communication theories, researchers can gain deeper insights into how media frames issues and sets agendas in public discourse.

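This blog post is based on my bachelor thesis “Natural Language Processing in den Politikwissenschaften: Das Potenzial automatisierter Textanalysen am Beispiel der Flüchtlingskrise” (“Natural Language Processing in Political Science: The Potential of Automated Text Analysis, Illustrated by the Refugee Crisis”), completed at the University of Hamburg in 2024.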